Day-2 Data Store, Import and Export Using DHIS2 Web API

- Data Store

Using the dataStore resource, developers can store arbitrary data for their apps. If an app has reserved a namespace, access to that namespace is limited to users who have access to the app. For example, a user with access to the “sampleApp” application will also be able to use the sampleApp namespace in the datastore. If a namespace is not reserved, no specific access is required to use it.

/api/dataStore

Example:

To get all the namespaces in the datastore:

http://103.247.238.86:8081/dhis28/api/dataStore

Result:

[

"scorecards",

"leagueTables",

"OrgUnitManager"

]

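To get the keys in a specific namespace:

Data store entries consist of a namespace, a key and a value. A GET request on a namespace lists its keys; a GET request on namespace/key returns the value stored under that key.

/api/dataStore/namespace

/api/dataStore/namespace/key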

Example:

http://103.247.238.86:8081/dhis28/api/dataStore/OrgUnitManager

Result:

[

"10000002_302607null",

"10000003_302607null",

"10000004_302607null",

"10000005_302607null",

"10000006_302607null",

"10000007_302680null",

"10000008_302626null",

"10000009_302680null",

"10000010_302680null",

"10000011_302680null",

"10000012_302607null",

"10000013_302680null",

"10000014_302680",

"10000015_302654",

"10000016_302640",

....

]
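The read URLs above all follow one pattern, which could be sketched in Python as below. The helper name `datastore_url` is a hypothetical illustration and not part of DHIS2; only the `/api/dataStore` path layout comes from the text above.

```python
from urllib.parse import quote


def datastore_url(base_url, namespace=None, key=None):
    """Build a dataStore URL (hypothetical helper, not a DHIS2 API).

    No namespace    -> list all namespaces
    namespace only  -> list the keys in that namespace
    namespace + key -> address a single entry
    """
    if key is not None and namespace is None:
        raise ValueError("a key requires a namespace")
    parts = [base_url.rstrip("/"), "api", "dataStore"]
    # Percent-encode each path segment so unusual characters stay in one segment.
    parts += [quote(p, safe="") for p in (namespace, key) if p is not None]
    return "/".join(parts)


base = "http://103.247.238.86:8081/dhis28"
print(datastore_url(base))
print(datastore_url(base, "OrgUnitManager"))
print(datastore_url(base, "OrgUnitManager", "10000014_302680"))
```

The function only builds strings; fetching the URL still requires an authenticated HTTP client.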

To create a new key and value for a namespace:

POST /api/dataStore/namespace/key

Example:

POST http://103.247.238.86:8081/dhis28/api/dataStore/scorecards/DHIS2Level2AcademyBD

With the JSON payload:

{ "key1":"value1" , "key2":"value2" }

Result:

{

"httpStatus": "Created",

"httpStatusCode": 201,

"status": "OK",

"message": "Key 'DHIS2Level2AcademyBD' created."

}

To update the value of an existing key:

PUT /api/dataStore/namespace/key

With the JSON payload:

{ "key1":"value1" , "key2":"value2" }

To delete key:

DELETE /api/dataStore/namespace/key

To delete all keys and the namespace:

DELETE /api/dataStore/namespace
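The create, update and delete calls differ only in HTTP method, path depth and payload. A minimal Python sketch of that, assuming HTTP Basic authentication and placeholder credentials, might look as follows. The helper `datastore_request` is hypothetical; it only prepares the request and does not send it.

```python
import base64
import json
from urllib.request import Request


def datastore_request(base_url, namespace, key=None, method="GET",
                      value=None, user="user", password="pass"):
    """Prepare (but do not send) a dataStore request.

    Hypothetical helper: the function name, the placeholder credentials
    and the use of HTTP Basic auth are assumptions for illustration.
    """
    url = f"{base_url.rstrip('/')}/api/dataStore/{namespace}"
    if key is not None:
        url += f"/{key}"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {"Authorization": f"Basic {token}"}
    body = None
    if value is not None:
        # POST and PUT carry the entry's value as a JSON document.
        body = json.dumps(value).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return Request(url, data=body, headers=headers, method=method)


base = "http://server"  # placeholder server address
payload = {"key1": "value1", "key2": "value2"}
create = datastore_request(base, "scorecards", "DHIS2Level2AcademyBD", "POST", payload)
update = datastore_request(base, "scorecards", "DHIS2Level2AcademyBD", "PUT", payload)
delete_key = datastore_request(base, "scorecards", "DHIS2Level2AcademyBD", "DELETE")
delete_ns = datastore_request(base, "scorecards", method="DELETE")
for req in (create, update, delete_key, delete_ns):
    print(req.get_method(), req.full_url)
```

Passing one of these objects to `urllib.request.urlopen` would send it. Because deleting a namespace removes all of its keys, it is worth checking the printed URL before sending a DELETE.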

Detail: https://docs.dhis2.org/2.27/en/developer/html/webapi_data_store.html

- Import and Export Using DHIS2 Web API

This is a general-purpose endpoint for exporting multiple object types at the same time. It supports both global object and field filters and type-specific filters.

It was mainly developed to support use cases where you want to move a complete DHIS2 database from one instance to another, but it has proven useful in many other cases, for example when you want to get multiple non-interconnected types, such as data elements and indicators, at the same time.

The metadata API is available at /api/metadata. XML and JSON resource representations are supported.

Example:

http://103.247.238.86:8081/dhis28/api/metadata

Result:

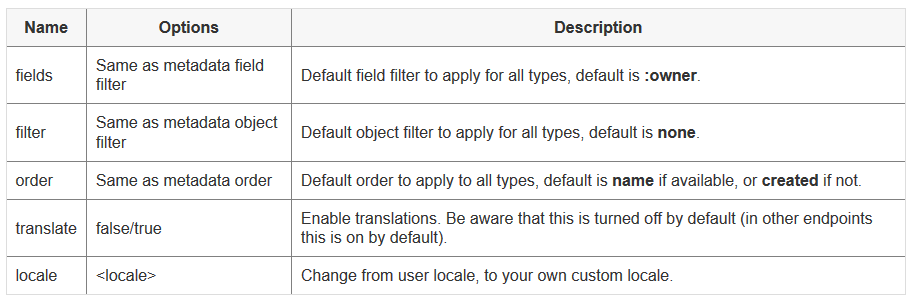

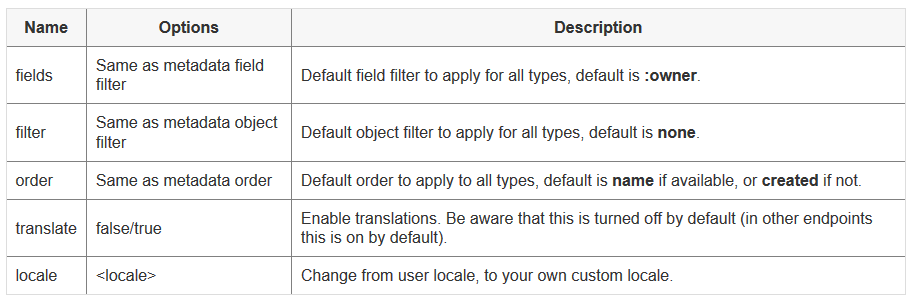

Metadata Export Parameters

Export all metadata:

curl -u user:pass "http://server/api/metadata"

Export all metadata ordered by lastUpdated descending:

curl -u user:pass "http://server/api/metadata?defaultOrder=lastUpdated:desc"

Export id and displayName for all data elements, ordered by displayName:

curl -u user:pass "http://server/api/metadata?dataElements:fields=id,displayName&dataElements:order=displayName:desc"

Export data elements and indicators where name starts with "ANC":

curl -u user:pass "http://server/api/metadata?defaultFilter=name:^like:ANC&dataElements=true&indicators=true"

The URLs are quoted so that the shell does not interpret the ? and & characters.
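When export URLs are assembled in a script rather than typed by hand, the query string can be built from a dictionary. The sketch below is one possible way to do this in Python; `metadata_export_url` is a hypothetical helper, and leaving `:`, `^`, `,`, `[` and `]` unescaped is a choice made here to mirror the curl examples above, not a documented requirement.

```python
from urllib.parse import urlencode


def metadata_export_url(server, params=None):
    """Build a metadata export URL (hypothetical helper for illustration)."""
    url = f"{server.rstrip('/')}/api/metadata"
    if params:
        # Keep ':' '^' ',' '[' ']' readable; they are part of the
        # filter/order/fields syntax used in the curl examples.
        url += "?" + urlencode(params, safe=":^,[]")
    return url


server = "http://server"  # placeholder server address
print(metadata_export_url(server))
print(metadata_export_url(server, {"defaultOrder": "lastUpdated:desc"}))
print(metadata_export_url(server, {
    "defaultFilter": "name:^like:ANC",
    "dataElements": "true",
    "indicators": "true",
}))
```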

● Object filter

○ ?filter=property-name:operator:value (global)

○ ?type:filter=property-name:operator:value

● Field filter

○ ?fields=property-a,property-b[property-ba,property-bb] (global)

○ ?type:fields=property-a,property-b[property-ba,property-bb]

● Other

○ ?translate=true/false

○ ?locale=your-locale
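The filter syntax above is regular enough to generate. As a rough sketch, the two hypothetical Python helpers below return a (parameter, value) pair for an object filter or a field filter, global or type-specific. The function names and the `categoryCombo` property used in the example are illustrative assumptions.

```python
def object_filter(prop, operator, value, type_name=None):
    """Return a (parameter, value) pair for an object filter.

    With type_name the filter applies to that type only; without it
    the filter is global. Hypothetical helper for illustration.
    """
    name = f"{type_name}:filter" if type_name else "filter"
    return name, f"{prop}:{operator}:{value}"


def field_filter(fields, type_name=None):
    """Return a (parameter, value) pair for a field filter.

    A plain string is a property; a (name, [children]) tuple becomes
    name[child,child], matching the bracket syntax shown above.
    """
    def render(item):
        if isinstance(item, tuple):
            parent, children = item
            return f"{parent}[{','.join(render(c) for c in children)}]"
        return item

    name = f"{type_name}:fields" if type_name else "fields"
    return name, ",".join(render(f) for f in fields)


print(object_filter("name", "like", "ANC"))
print(field_filter(["id", ("categoryCombo", ["id", "name"])], "dataElements"))
```

Each pair can be used as one entry in the query string of an /api/metadata request.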

- Import Endpoint

This is the general endpoint for importing DXF2 metadata and is generally the preferred endpoint for integration with DHIS2.

It is directly linked to the underlying metadata domain model, so integrations might break from time to time; always check the version upgrade notes.

It is mainly mounted at /api/metadata, but it is also the underlying importer used when you POST or PUT to any other metadata endpoint, /api/type.

The importer supports many different import parameters. For most day-to-day integrations the defaults should be good enough, but it is good to know a bit about what is available if you choose to use them.

○ importMode=COMMIT/VALIDATE If the mode is COMMIT, the payload is validated and then imported (if validation passed). If the mode is VALIDATE, the payload is only validated and the report is returned to the client.

○ atomicMode=ALL/NONE This mode switches between the legacy way of treating references and the new style. If the mode is ALL, all references must be valid. If it is NONE, the importer will allow some invalid references and do a best-effort import.

○ flushMode=AUTO/OBJECT Should be left at AUTO unless you are seeing some strange stack traces in your import. Mainly used as a debug tool to help with writing JIRA issues (the mode needs to be OBJECT to make sure that the stack trace is reported at the correct object).

○ More parameters are available at https://docs.dhis2.org/master/en/developer/html/webapi_metadata_import.html

● All payloads are validated before any import happens. This takes a few things into consideration:

○ Schema validation: Using the information from the /api/schemas endpoint, it validates the properties and makes sure that requirements such as uniqueness and required properties are fulfilled.

○ Security validation: Verifies that the current user is allowed to create/update/delete the object currently being imported.

○ Mandatory attributes: Verifies that all mandatory metadata attributes are there (and have a valid type).

○ Unique attributes: Verifies that all unique metadata attributes have a unique value.
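To make the import parameters concrete, here is a minimal Python sketch that prepares a POST to /api/metadata with importMode, atomicMode and flushMode set explicitly. The helper `metadata_import_request` and the sample payload are hypothetical, authentication is omitted, and defaulting to VALIDATE is a choice made in this sketch so that the example is a dry run; it is not a statement about the server's own defaults.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Values listed in the text above for each parameter.
ALLOWED = {
    "importMode": ("COMMIT", "VALIDATE"),
    "atomicMode": ("ALL", "NONE"),
    "flushMode": ("AUTO", "OBJECT"),
}


def metadata_import_request(server, payload, import_mode="VALIDATE",
                            atomic_mode="ALL", flush_mode="AUTO"):
    """Prepare (but do not send) a metadata import request.

    Hypothetical helper; add authentication before sending.
    """
    params = {
        "importMode": import_mode,
        "atomicMode": atomic_mode,
        "flushMode": flush_mode,
    }
    for name, value in params.items():
        if value not in ALLOWED[name]:
            raise ValueError(f"{name} must be one of {ALLOWED[name]}, got {value!r}")
    url = f"{server.rstrip('/')}/api/metadata?{urlencode(params)}"
    return Request(url, data=json.dumps(payload).encode("utf-8"),
                   headers={"Content-Type": "application/json"}, method="POST")


# Placeholder payload: the data element below is made up for illustration.
payload = {"dataElements": [{"name": "Example element", "shortName": "Example"}]}
dry_run = metadata_import_request("http://server", payload)
print(dry_run.get_method(), dry_run.full_url)
```

Running with importMode=VALIDATE first and reading the returned report before switching to COMMIT is a cautious way to try out a new payload.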
